REVIEW: The Variational Principles of Mechanics, by Cornelius Lanczos

The Variational Principles of Mechanics, Cornelius Lanczos (University of Toronto Press, 1949).

While sailing a little boat the other day, I thought of a new way to troll the Aristotelians. I love it when my hobbies converge like that, and if the second one sounds a little mean-spirited, well, remember that they deserve it.

If humanity has learnt anything in the past few thousand years, it’s to be wary of playing language games with words signifying abstract concepts, and then telling yourself that you’ve discovered something about the things signified. The danger is most strikingly apparent for anything involving infinities, where all but the most extreme epistemic bomb-defusal approaches will quickly lead you to paradox and absurdity.[1] But it’s also true of a notion like causality, which turns out to be a good bit more slippery and subtle than the ancients gave it credit for. I don’t blame Aristotle for this; he didn’t know any better, after all. He shared in that youthful exuberance and innocence that philosophy had when the world was still young. But his modern-day fans? They ought to know better.

Anyway, there I was, zipping over the waves on a beam reach, when I came up with this syllogism:

The cause of a sailboat’s motion is the wind.

Therefore a sailboat cannot sail faster than the wind.

Had I been close-hauled instead, the spray flying in my face, I might have gone with a modified version:

The cause of a sailboat’s motion is the wind.

Therefore a sailboat cannot sail against the wind.

If you aren’t a sailor, these arguments might sound plausible to you. I’m certain that to Aristotle’s contemporaries, who were limited in their sailing experience to square-rigged ships that ran before the wind with sails that caught the air like plastic shopping bags, they would have been very convincing indeed. Alas for the theory, a properly trimmed modern sailboat will merrily beat upwind, and given time and space to pick up speed, can fly faster than the wind that powers it. So my syllogisms must contain a fallacy (can you figure out what it is?),[2] and philosophy is once again BTFO.

This may seem like a silly example, but some of the science maximalists out there (guys like Lawrence Krauss) would tell you that it’s representative of nearly all encounters between philosophy and physics. The way these guys tell it, philosophy doesn’t even deserve to be called a mode of reasoning, because you don’t get anything out of it that you didn’t put in. All philosophical argumentation is like my failed argument about sailboats above, or like the adorably nonsensical reasoning that toddlers engage in, not so much hiding the ball as unable to see it. I don’t know anything about Kant, but I think in Kantian terms it amounts to denying that synthetic a priori judgements are possible. The only way to find out new things about the world is to go outside and look at it.

To be clear, this is not my view, but I get where it’s coming from. The last five hundred years have been rough for philosophy in general and for Aristotle in particular. Time and again, philosophers have put together a theory of causation based on the previous revolution in physics, convinced themselves that they’re not just curve fitting, but actually in touch with deep universal principles, and then had to watch their beautiful new theory get blown up by the next revolution in physics. I imagine that’s disheartening, but the time it happened to Aristotle was especially traumatic to philosophy and society, and I need to explain why that was before I can explain why the book I’m reviewing today is so cool.

Aristotelian science mattered far beyond ancient Greece: it dominated European and Arab thinking for over a thousand years. A key precept of Aristotle’s was that when trying to understand natural phenomena, final causes — the ends or the purposes toward which a phenomenon was directed — were the most fundamental answer to the question of “why” something happens. In particular, he argued that final causes held explanatory preeminence over efficient causes — the immediate or instantaneous source of an object’s motion.

There are domains where this point of view makes intuitive sense. For example, if somebody asks you, “why is the dog running?” they will probably be satisfied with the answer “because it wants to eat its food,” but will look at you funny if you say “because its legs are propelling it via contact with the ground.” We understand that dogs are goal-directed beings, so the explanation via final causes, the goals or ends of its action, seems like a better, more complete explanation than an answer referring only to brute mechanics.

The thing that sounds alien to modern ears is that Aristotle insists the same is true of simple material phenomena.[3] If you ask why a stone falls, Aristotle thinks that “because the force of gravity is pulling it down” is a worse answer than “because it’s made of earth and therefore wants to be with the earth.” Aristotle isn’t an animist; he doesn’t actually think the stone is alive and wants something. It’s more that the entire universe is a holistic and organic system that is meant to be a certain way and evolves towards the thing it’s ultimately meant to be, and the stone falling towards the earth brings it a tiny step closer to that ultimate destiny.

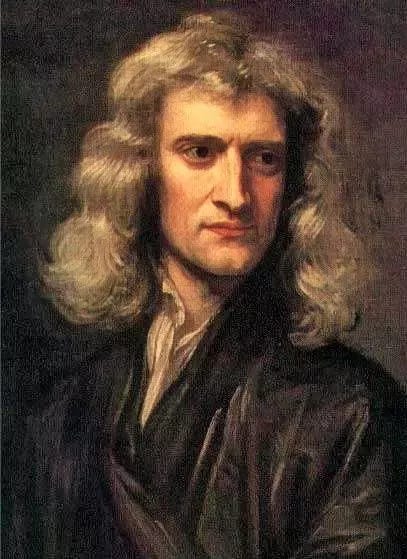

This is not generally the way people think today. In fact, it wasn’t the way people thought in ancient Greece either! Aristotle had many detractors who thought applying final causes to brute physical phenomena was ridiculous. But they lost, and Aristotle won, and his way of thinking dominated European thought for millennia. That all changed, finally, when Newton demonstrated that the motion of an object was totally and completely determined by the forces acting on it.

An Aristotelian might protest (and they did, and there are still a few cranks who do) that Newton’s contribution didn’t really add anything to the essential picture; all he offered was a more precise accounting of the efficient causes of an object’s motion. But this objection fails to grapple with the magnitude of the revolution that had happened. A pre-Newtonian account of efficient causes was essentially qualitative: people knew that the force imparted to a rock by your hand was what propelled it, and they knew that if you threw it harder, it went further, but that was it. What Newton offered was a precise computation that, given some information about the force of the throw and the weight of the rock, told you exactly where it would land. But if those data were sufficient to tell you exactly where it would land, what need was there for anything else in the explanatory account? Suddenly those final causes look totally vestigial. You can say everything there is to say about the rock’s motion without taking them into account, and so Occam’s razor slices them away.
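For the simplest textbook version of that computation (a sketch assuming a rock launched from ground level at speed v_0 and angle θ above the horizontal, with air resistance ignored), Newton’s second law with constant downward acceleration g gives the landing distance R in closed form. The mass cancels, so the force of the throw and the weight of the rock matter only through the launch speed they produce.

```latex
m\ddot{x} = 0, \qquad m\ddot{y} = -mg
\;\Longrightarrow\;
x(t) = v_0 t\cos\theta, \qquad y(t) = v_0 t\sin\theta - \tfrac{1}{2} g t^2

y(t_\ast) = 0 \;\Longrightarrow\; t_\ast = \frac{2 v_0 \sin\theta}{g},
\qquad
R = x(t_\ast) = \frac{2 v_0^2 \sin\theta\cos\theta}{g} = \frac{v_0^2 \sin 2\theta}{g}
```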

I’m a cynic about scientific progress and believe that mere correctness is no guarantee of a new theory’s triumph. But what made Newtonian physics unstoppable was the unprecedented degree of control over nature that it offered to its adherents. If knowing the forces on a rock allows you to predict exactly where it will land, then you will be able to aim your cannon much more accurately. When believing a new theory imparts massive economic and military advantages, there’s a good chance it will win. And so Newtonian mechanics swept everything before it, Aristotelian physics was dethroned after a reign of some two thousand years, and our picture of the universe completely changed.

It really did change completely. Before Newton’s revolution, people pictured the cosmos as a vast machine with a purpose, or at least a destination. The pieces of that machine had their own purposes, their own goals and destinies, and by evolving towards those destinies they aided and were aided by everything else. If you read my review of Needham’s Science in Traditional China, this will no doubt sound highly reminiscent of what he describes as an “organicist conception in which every phenomenon was connected with every other according to a hierarchical order.”

Newtonian mechanics turns this picture inside out. It’s not just that it’s suddenly possible to think of the cosmos as a totally mechanistic collection of particles bouncing around according to brute arithmetic laws; what’s more shocking is that this picture notionally contains all the information you need to predict the entire future history of the universe.[4] What’s scary and disruptive about this hyper-reductionist metaphysics is that it works so well that everything else (free will, divine intent, final causes, all of it) suddenly looks vestigial and begins to melt away.

Charles Taylor wrote a very long book about “disenchantment,” which is the secularizing process that made people stop thinking of the universe as being full of ghosts and demons and miracles and purposes, and start thinking of it as a collection of self-contained particles bouncing off each other. But he curiously underemphasizes the extent to which it was a scientific revolution that made that whole process intellectually plausible. We all know how that story ends: with quantum mechanics, Gödelian incompleteness, and the rest of it all filling the universe with ghosts again. But even the quantum universe and the relativistic universe are just elaborations on Newton’s “System of the World” and abide by its basic philosophical premise: that the universe is like a big computer that takes its current state, applies certain rules, and produces a next state. In fact, this assumption is so pervasive, and the revolution has been so thorough, that most physicists cannot even form the thought that there could exist a conceptually well-defined alternative.

But actually, problems with the ultra-reductionist view appeared almost immediately, even while Newton was still alive. Consider Snell’s law. When light passes through the interface between one substance and another, it gets refracted, or bent (this is why the world looks weird when you look at it through a transparent glass of water). Snell’s law tells you exactly how it gets refracted: it provides a direct functional relationship between the angle by which the light is bent and the properties of the two materials at the interface.
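In symbols, with θ_1 and θ_2 the angles the ray makes with the normal to the interface on each side, and n = c/v the refractive index of each medium (v being the speed of light in that medium), Snell’s law reads as follows. The second form, obtained by substituting n = c/v and cancelling c, is the one that connects to travel time.

```latex
n_1 \sin\theta_1 = n_2 \sin\theta_2
\quad\Longleftrightarrow\quad
\frac{\sin\theta_1}{v_1} = \frac{\sin\theta_2}{v_2}
```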

But look a little closer, and there’s something unsettling about Snell’s law. Light travels at different velocities in different media (the “speed of light” you’ve heard a lot about is actually just the speed of light in a vacuum), and it just so happens that the angles Snell’s law picks are always the ones such that, for any given point in the second medium, the path chosen minimizes the total time for light to travel to that point. Imagine a lifeguard trying to reach a drowning man who’s some distance out at sea and also some distance down the beach, and suppose the lifeguard can run faster on land than he can swim in the water. There’s a particular diagonal route, and a particular spot at the water’s edge, which minimizes the time it takes for him to rescue the drowning guy. Here’s a diagram of that, and notice how it’s very similar to the above diagram of Snell’s law.
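The lifeguard’s problem is small enough to solve by brute force. The sketch below is illustrative only: all numbers and function names are hypothetical. It puts the shoreline along the x-axis, searches for the entry point that minimizes total rescue time, and then checks that the run and swim angles, measured from the normal to the shoreline, satisfy the same condition as Snell’s law, sin θ_land / v_land = sin θ_water / v_water. Ternary search is just one simple way to minimize a convex function of one variable; any one-dimensional minimizer would do.

```python
import math


def travel_time(x, h_land, h_water, d, v_land, v_water):
    """Total rescue time if the lifeguard reaches the water's edge at position x."""
    run = math.hypot(x, h_land)        # straight run across the sand
    swim = math.hypot(d - x, h_water)  # straight swim out to the swimmer
    return run / v_land + swim / v_water


def best_entry_point(h_land, h_water, d, v_land, v_water, iters=200):
    """Ternary search for the fastest entry point on [0, d].

    travel_time is convex in x, so repeatedly discarding the third of the
    interval on the side with the larger time converges to the minimum.
    """
    def t(x):
        return travel_time(x, h_land, h_water, d, v_land, v_water)

    lo, hi = 0.0, d
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if t(m1) < t(m2):
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2


# Hypothetical numbers: lifeguard 20 m from the water, swimmer 30 m out and
# 50 m down the beach; running at 7 m/s, swimming at 2 m/s.
H_LAND, H_WATER, D, V_LAND, V_WATER = 20.0, 30.0, 50.0, 7.0, 2.0
x = best_entry_point(H_LAND, H_WATER, D, V_LAND, V_WATER)

# Angles measured from the normal to the shoreline, as in Snell's law.
sin_land = x / math.hypot(x, H_LAND)
sin_water = (D - x) / math.hypot(D - x, H_WATER)
print(f"enter the water {x:.2f} m down the beach")
print(f"sin(theta_land) / v_land   = {sin_land / V_LAND:.6f}")
print(f"sin(theta_water) / v_water = {sin_water / V_WATER:.6f}")
```

Setting the derivative of the travel time with respect to x to zero gives exactly that equality, which is why the two printed ratios should agree to within the precision of the search.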

What’s unsettling is that while a lifeguard can look ahead, see where the swimmer is, then do some trigonometry and figure out the optimal route, light doesn’t “look” anywhere; it just follows brute physical laws and gets propelled along by efficient causes. But in that case, how does it “know” the exact angle at which to refract in order to minimize the total time? It doesn’t seem possible to make such a decision locally, without communicating information back in time, or pondering alternatives, or something. Or, as Richard Feynman once put it:

The idea of causality… is easy to understand. But the principle of least time is a completely different philosophical principle about the way nature works. …it says this: we set up the situation, and light decides which is the shortest time, or the extreme one, and chooses that path. But what does it do, how does it find out? Does it smell the nearby paths, and check them against each other?

This sort of thing isn’t limited to optics. If you’ve read Ted Chiang’s wonderful Story of Your Life or watched the slightly less wonderful film Arrival that was based on it, then you’re probably aware that such principles have crept into the rest of physics. Or, alternatively, you may have heard some of the dumb popularizations of quantum mechanics that refer to a particle “trying out all possible paths simultaneously.” It took me an embarrassingly long time to figure out that this is actually just philosophically illiterate science-journalist-speak for precisely this sort of extremal principle or final cause.[5]

Anyway, I wanted to learn about this stuff, and I’m a firm believer that you can’t learn anything in physics without getting your hands dirty and solving some integrals. If you don’t live and breathe the math, then you’re in extreme danger of “string theory is like a taco”-syndrome. So I went and bought myself two books: the first was Goldstein’s Classical Mechanics, a doorstopper of a textbook chock-full of tricky problems. The second was the very different, but equally wonderful, book by Cornelius Lanczos that I’m reviewing. The subject of both of these books is Lagrangian mechanics, an 18th-century reformulation of Newtonian mechanics in terms of “global,” extremal principles. Or, if you want to upset a physicist, a reformulation in terms of final causes which proves that Aristotle was right all along.

I picked up Lanczos’ book because I had heard that it was Albert Einstein’s favorite physics textbook (Lanczos and Einstein were contemporaries and friends), but had no idea what to expect beyond that. Imagine my joy on opening the preface and reading these words:

Many of the scientific treatises of today are formulated in a half-mystical language, as though to impress the reader with the uncomfortable feeling that he is in the permanent presence of a superman. The present book is conceived in a humble spirit and is written for humble people…

The author is well aware that he could have shortened his exposition considerably, had he started directly with the Lagrangian equations of motion and then proceeded to Hamilton’s theory. This procedure would have been justified had the purpose of this book been primarily to familiarize the student with a certain formalism and technique in writing down the differential equations which govern a given dynamical problem, together with certain “recipes” which he might apply in order to solve them. But this is exactly what the author did not want to do. There is a tremendous treasure of philosophical meaning behind the great theories of Euler and Lagrange, and of Hamilton and Jacobi, which is completely smothered in a purely formalistic treatment, although it cannot fail to be a source of the greatest intellectual enjoyment to every mathematically-minded person. To give the student a chance to discover for himself the hidden beauty of these theories was one of the foremost intentions of the author.

That’s right, this is an early example of the Moore method, wherein students learn a subject by inventing it themselves under guided questioning. The most extreme examples of the method involve literally zero explanation or exposition besides a series of increasingly difficult questions (check out Iain Adamson’s A General Topology Workbook for a great example of that), but Lanczos isn’t that extreme. He leaves you to mostly invent this field of science by yourself, but checks in occasionally to explain the trickiest points, or to drop a few paragraphs of beautiful philosophical contextualization.

The first step in the voyage of personal discovery that Lanczos wishes to guide you on is the invention of the calculus of variations. If you studied basic differential and integral calculus, you may remember that it contains a set of methods for determining the maxima, minima, and saddle points of functions. But if our goal is to invent a kind of physics where we posit that the universe is trying to pick overall trajectories that are “the best” or “most extreme” or “most stationary” in some sense, then we need something more abstract and more complicated than basic calculus. A trajectory, a path through space-time or through phase space, is already a function. So we don’t want to find extremal points of functions; we want to find extremal points of functions of functions.
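In code, a “function of functions” (a functional) is simply a function whose argument is itself a function and whose output is a single number. A minimal sketch, using arc length as the example (my choice for illustration, not one taken from Lanczos; the names are hypothetical): it scores three candidate curves that all join (0, 0) to (1, 1). Comparing a handful of candidates by brute force is exactly what the calculus of variations lets you avoid, since it characterizes the best curve among all of them at once.

```python
import math


def arc_length(f, a, b, n=10_000):
    """A functional: takes a whole curve f (a Python function) and returns one
    number, the length of the graph of f between x = a and x = b, approximated
    by summing the lengths of n straight chords."""
    xs = [a + (b - a) * i / n for i in range(n + 1)]
    return sum(math.hypot(x1 - x0, f(x1) - f(x0)) for x0, x1 in zip(xs, xs[1:]))


# Three candidate curves, all joining the same endpoints (0, 0) and (1, 1).
candidates = {
    "straight line": lambda x: x,
    "parabola": lambda x: x ** 2,
    "wavy line": lambda x: x + 0.2 * math.sin(math.pi * x),
}
for name, f in candidates.items():
    print(f"{name:14s} length = {arc_length(f, 0.0, 1.0):.6f}")
```

The straight line should come out shortest, at the square root of 2, which is the answer the calculus of variations gives for the shortest curve between two points in the plane.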

As it happens, at the end of the 17th century people were already thinking about a special case of this problem. In a 1696 issue of Acta Eruditorum, Johann Bernoulli threw down the gauntlet:

I, Johann Bernoulli, address the most brilliant mathematicians in the world. Nothing is more attractive to intelligent people than an honest, challenging problem, whose possible solution will bestow fame and remain as a lasting monument. Following the example set by Pascal, Fermat, etc., I hope to gain the gratitude of the whole scientific community by placing before the finest mathematicians of our time a problem which will test their methods and the strength of their intellect. If someone communicates to me the solution of the proposed problem, I shall publicly declare him worthy of praise

…

Given two points A and B in a vertical plane, what is the curve traced out by a point acted on only by gravity, which starts at A and reaches B in the shortest time.

It sounds simple, but it’s anything but. The answer is not a straight diagonal line, because if the curve drops more steeply at the beginning, then the particle picks up speed more quickly and wins the race. But you also can’t drop too fast, like the blue curve in the animation below, because doing so increases the total distance the particle has to travel (do you see how this is exactly analogous to Snell’s law and to the story about the lifeguard above?).
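To put numbers on the race, here is a minimal sketch comparing the straight ramp with one particular alternative, a cycloid (the curve that turns out to solve the problem). It assumes a frictionless bead starting from rest, g = 9.81 m/s², y measured downward, and an endpoint B placed at the bottom of a cycloid of radius R = 1 m; the function names are hypothetical. Along the cycloid x = R(φ − sin φ), y = R(1 − cos φ), the time element ds/v simplifies to sqrt(R/g) dφ, which gives the closed form used below.

```python
import math

G = 9.81  # m/s^2, assumed gravitational acceleration


def straight_line_time(width, drop, g=G):
    """Slide from rest down a straight frictionless ramp.

    The acceleration along the ramp is constant, g * sin(alpha), so the time
    follows from length = accel * t**2 / 2.
    """
    length = math.hypot(width, drop)
    accel = g * drop / length  # g * sin(alpha)
    return math.sqrt(2 * length / accel)


def cycloid_time(radius, phi_end, g=G):
    """Slide from rest along x = R(phi - sin phi), y = R(1 - cos phi).

    Here ds / v = sqrt(R / g) dphi, so the time is phi_end * sqrt(R / g).
    """
    return phi_end * math.sqrt(radius / g)


R = 1.0
# Endpoint B at phi = pi: pi * R across and 2 * R down from the start A.
width, drop = math.pi * R, 2 * R
t_line = straight_line_time(width, drop)
t_cyc = cycloid_time(R, math.pi)
print(f"straight line: {t_line:.3f} s")
print(f"cycloid:       {t_cyc:.3f} s")
```

With these numbers the straight ramp takes roughly 1.19 s and the cycloid roughly 1.00 s, even though the cycloid (arc length 4R = 4 m) is longer than the ramp (about 3.72 m): the early steep drop buys enough speed to more than pay for the extra distance.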

This became known as the brachistochrone problem, and it occupied the best minds of Europe for, well, for less time than Johann Bernoulli hoped. The legend goes that he issued that pompous challenge I quoted above, and shortly afterward discovered that his own solution to the problem was incorrect. Worse, in short order he received five copies of the actually correct solution to the problem, supposedly all on the same day. The responses came from Newton, Leibniz, l’Hôpital, Tschirnhaus, and worst of all, his own brother Jakob Bernoulli, who had upstaged him yet again.

What’s fun about this story is that, if it’s true, it provides us with a nice rank-ordering of the IQs of late-17th-century scientists. He may have gotten all the responses on the same day, but back then letters took very different amounts of time to travel to different cities. So his brother Jakob, in Basel, had weeks to work on the problem while Johann’s challenge slowly made its way to London and Newton’s response slowly made its way back. As it happens, one of Newton’s servants left a journal entry stating that one night the master arrived home from the Royal Mint, found a letter from abroad, flew into a rage, stayed up all night writing a response, and sent it out in the next morning’s post. If Newton really did solve in one night a problem that took Bernoulli weeks and Leibniz and l’Hôpital at least a few days, then this gives a sense of the fearsomeness of his powers. Newton’s own comment on the topic was simply: “I do not love to be dunned and teased by foreigners about mathematical things.”

The brachistochrone problem clearly fits the schema I described: each of the possible curves that you could draw from A to B is itself a function. But we aren’t trying to find a stationary or extremal point of those functions; instead, we’re trying to find something more abstract, a stationary or extremal point in the space of all possible functions. With a little Socratic guidance from Lanczos, it isn’t hard to find a set of conditions that must be true at such a point. These are the Euler-Lagrange equations, and the nice thing about them is that they work not only for the brachistochrone, not only for Snell’s law, but for any situation where you’re trying to find an extremal or stationary point in the space of all possible functions.
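Written out for a functional J of a curve y(x) with both endpoints held fixed, the condition is the Euler-Lagrange equation below. For the brachistochrone (y measured downward from A, the particle starting at rest so that its speed at depth y is sqrt(2gy)), the integrand F is the time spent per unit of horizontal distance, and the solutions of the resulting equation are cycloids.

```latex
J[y] = \int_{x_A}^{x_B} F\bigl(x, y(x), y'(x)\bigr)\,dx,
\qquad
\frac{\partial F}{\partial y} - \frac{d}{dx}\frac{\partial F}{\partial y'} = 0,
\qquad
F_{\text{brach}} = \frac{\sqrt{1 + y'^2}}{\sqrt{2 g y}}
```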

The payoff comes when you posit an arbitrary-seeming[6] quality of a physical system called the “action” and ask what the world would look like if the universe were constantly trying to pick trajectories that were extremal or stationary with respect to this action. So you apply the Euler-Lagrange equations, and out pop Newton’s familiar formulae, but from a totally different angle. It turns out that Newtonian mechanics just happens to be what particles have to do here and now in order for the sum of all their activities to follow a particular global principle.
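For a single particle of mass m moving in one dimension with potential energy V(x), the standard choice is to take the action S as the time integral of kinetic minus potential energy. Holding the endpoints fixed and applying the Euler-Lagrange equation (with t in place of x and x in place of y) returns Newton’s second law, with force F(x) = −V′(x):

```latex
S[x] = \int_{t_0}^{t_1} L(x, \dot{x})\,dt,
\qquad
L = \tfrac{1}{2} m \dot{x}^2 - V(x)

\frac{\partial L}{\partial x} - \frac{d}{dt}\frac{\partial L}{\partial \dot{x}} = 0
\;\Longrightarrow\;
-V'(x) - \frac{d}{dt}\bigl(m\dot{x}\bigr) = 0
\;\Longrightarrow\;
m\ddot{x} = -V'(x) = F(x)
```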

At first blush, it seems like all that happened was we did a bunch of hard math and then wound up right back at the same laws of physics we already knew. Sure, it’s cool that there’s this other way of conceptualizing them, but who cares? We didn’t get anything new out of this exercise. It’s also hardly a stunning philosophical comeback for final causes if the Newtonian interpretation is still sitting there looking just as good. The fact that each approach implies the other doesn’t give us any grounds for choosing between them, and the modern skeptic will be inclined to stick with the Newtonian “universe is a big, deterministic computer that evolves from state to state” viewpoint that he already knows.
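One way to see that the two pictures really do pick out the same trajectory is to put the action on a computer. The sketch below rests on assumptions that are not in the book: a rock in uniform gravity, the action chopped into N time steps with the potential averaged over each step, and illustrative parameter values and names. It compares the action of Newton’s parabola (launched at t = 0, caught at the same height at t = T_FLIGHT) with that of nearby paths sharing the same endpoints. For this potential the discretized action is a convex quadratic in the path, so the Newtonian path is a true minimum, not merely a stationary point.

```python
import math

# Assumed, illustrative parameters.
M, G = 1.0, 9.81        # mass (kg), gravitational acceleration (m/s^2)
T_FLIGHT, N = 2.0, 200  # total time (s), number of time steps
DT = T_FLIGHT / N
TIMES = [i * DT for i in range(N + 1)]


def action(path):
    """Discretized action: sum over steps of (kinetic - potential) * DT,
    with V(x) = M*G*x averaged over each step's two endpoints."""
    total = 0.0
    for x0, x1 in zip(path, path[1:]):
        kinetic = 0.5 * M * ((x1 - x0) / DT) ** 2
        potential = M * G * 0.5 * (x0 + x1)
        total += (kinetic - potential) * DT
    return total


# Newton's answer with x(0) = x(T_FLIGHT) = 0: x(t) = G*t*(T_FLIGHT - t)/2.
newton = [0.5 * G * t * (T_FLIGHT - t) for t in TIMES]


def perturbed(eps, k):
    """Same endpoints as the Newtonian path, plus a sine bump of size eps between them."""
    bump = [eps * math.sin(k * math.pi * i / N) for i in range(N + 1)]
    bump[0] = bump[-1] = 0.0  # pin both endpoints exactly
    return [x + b for x, b in zip(newton, bump)]


s_newton = action(newton)
print(f"Newtonian path: S = {s_newton:.6f}")
for eps in (-0.5, 0.1, 0.5):
    for k in (1, 2, 3):
        print(f"eps={eps:+.1f}, k={k}: S = {action(perturbed(eps, k)):.6f}")
```

Setting the derivative of this discrete action with respect to each interior point to zero gives x[i+1] - 2x[i] + x[i-1] = -G*DT², which is just Newton’s F = ma written on a grid, and the parabola satisfies it exactly.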

But the Lagrangian approach has a few things going for it. One of the first things you notice when sitting down to analyze a physical system in this new way is that the particular coördinates you happened to pick matter a lot less than they did when you were thinking in terms of Newtonian billiard balls bouncing around and imparting forces onto each other. When I was a schoolboy I despised exercises of the form “translate these equations of motion into a different reference frame” and so on. Part of why I hated them was that they were hard, but they also left a bad philosophical taste in my mouth. I didn’t know anything about relativity yet, but it was already obvious to me that the actual motion of a bouncing ball on a moving train shouldn’t depend on whether your coördinate system was at rest relative to the train or relative to the platform. The trouble was that in the Newtonian analysis, this fact, which seemed to me like a foundational truth about the universe, emerged from a mass of tortuous algebra and clung to being by the skin of its teeth. I had no doubt that the coördinate invariance would emerge, somehow, but I hated the fact that there was nothing in the equations that showed why it obviously had to be so, given that it obviously had to be so.

When you switch to the Lagrangian approach, the particular coördinate system you use becomes almost totally arbitrary. This has practical benefits, because it means you can pick coördinates that are convenient for your purpose. But it’s also philosophically satisfying, because the universe just is the universe, however we choose to describe it. And there’s another entirely different benefit, closely related to this coördinate invariance. I’ve written before about the importance of symmetries in the laws of physics, and about the great discovery that every continuous symmetry gives rise to a conserved quantity. That correspondence between symmetries and conservation laws (Noether’s theorem) operates through the Lagrangian, and when you have a system described by a Lagrangian, you can often just read those symmetries right off the page.
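A standard illustration of both points is a particle of mass m moving in a plane under a central potential V(r). In polar coordinates, the convenient choice here, the Lagrangian contains the angle φ only through its rate of change. The Euler-Lagrange equation for φ then says immediately that the conjugate momentum, the angular momentum, is conserved: the rotational symmetry read straight off the page.

```latex
L = \tfrac{1}{2} m \left(\dot{r}^2 + r^2 \dot{\varphi}^2\right) - V(r),
\qquad
\frac{\partial L}{\partial \varphi} = 0
\;\Longrightarrow\;
\frac{d}{dt}\frac{\partial L}{\partial \dot{\varphi}} = 0
\;\Longrightarrow\;
m r^2 \dot{\varphi} = \text{const.}
```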

All of this is circumstantial evidence that the Lagrangian approach is the “real” description of nature, and that the Newtonian picture of particles bouncing around and reacting to efficient causes is an approximation that we happened to stumble upon first. That approximation then seized the imagination of Enlightenment Europe, and commands the allegiance of scientists down to this day. But look how historically contingent that is! If we had discovered the Lagrangian formalism first, would we ever have bothered with the Newtonian approach with its messier math, lack of coördinate invariance, and dissonance with the still-reigning worldview of the great Aristotle?

Something else that sticks in my craw about the Newtonian philosophical worldview is that there’s something awfully… anthropic about it. The way human beings solve math problems, and especially the way human beings program computer simulations of physical systems, is precisely by taking an initial state and then evolving it according to a deterministic set of rules. It is very much not the Lagrangian approach, which Max Planck once described as “the actual motion at a certain time is calculated by means of considering a later motion.” But doesn’t that mean the Newtonian philosophy just amounts to saying that the universe behaves exactly the way human beings solve physics problems? Isn’t that one of these extremely “geocentric” philosophical premises that celebrity physicists constantly mock when a religious weirdo is doing it?
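For comparison, here is what the “initial state plus update rule” style looks like in code. It is a minimal sketch with hypothetical names, assuming uniform gravity, no air resistance, and velocity Verlet as the stepping scheme (one common choice among many). It evolves a rock thrown straight up, one state at a time, until it comes back down. Nothing in the loop knows where or when the rock will land; the landing time only emerges by marching forward.

```python
from dataclasses import dataclass

G = 9.81  # m/s^2, assumed uniform gravity


@dataclass
class State:
    t: float  # time (s)
    x: float  # height (m)
    v: float  # vertical velocity (m/s)


def step(s: State, dt: float) -> State:
    """One velocity Verlet update for constant acceleration a = -G."""
    a = -G
    x_next = s.x + s.v * dt + 0.5 * a * dt * dt
    v_next = s.v + a * dt  # with constant a, averaging old and new a just gives a
    return State(s.t + dt, x_next, v_next)


def flight_time(v0: float, dt: float = 1e-4) -> float:
    """March forward from launch until the height first drops to zero or below."""
    s = step(State(0.0, 0.0, v0), dt)
    while s.x > 0.0:
        s = step(s, dt)
    return s.t


v0 = 10.0
print(f"simulated: {flight_time(v0):.4f} s, closed form 2*v0/G: {2 * v0 / G:.4f} s")
```

Because the acceleration is constant, this update rule reproduces the exact parabola at every step, so the only error in the landing time comes from the step size dt.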

I used to think there was a valid quasi-anthropic argument that a Newtonian die-hard could still make: namely, that given that we live in a universe with coördinate invariance and with many sorts of symmetries, it was inevitable that its laws would admit a description in terms of Lagrangians and “imaginary” final causes. This possibility troubled me for a while, but then I mentioned it to a friend who’s an actual theoretical physicist, and he pointed out to me that my math was wrong. It’s true that any laws of physics expressed in terms of finding the stationary point of an action principle will necessarily give rise to equations of motion that are coördinate invariant, deterministic, reversible, have the proper symmetries, all that good stuff. But the implication doesn’t work in the other direction: you can dream up equations of motion that describe a sensible universe and that are covariant in all the right ways, but which cannot be interpreted in teleological form as the consequence of stationarizing a functional of the world’s overall trajectory.

All of which makes it much, much more curious that our universe does work this way. And it isn’t just classical mechanics. If you crack open a textbook on relativistic electrodynamics, or even general relativity itself, they pretty much all start with: “let’s guess at a convenient Lagrangian, oh look it gives us the correct equations of motion.” In fact, this is pretty much the real-world story of how general relativity was invented. Einstein started by positing the simplest field equations that could possibly work (the so-called “Entwurf” theory), and spent years going down a blind alley. Hilbert instead postulated the simplest possible Lagrangian, and out popped the correct field equations, enabling him to nearly scoop Einstein. (A wonderful book about this is Einstein’s Unification.)

Are we supposed to think that this is just chance? I’m a firm believer, with Feyerabend, that you can learn a lot more from scientists by watching what they do than by listening to what they say. In this case they tell you that the universe is just stuff bouncing into other stuff, then they go off to their labs and start dreaming up teleological laws about how the cosmos is harmoniously evolving along a path that only makes sense when the past and the future are viewed as one. I once watched a lecture by Leonard Susskind in which, in a moment of candor, he admitted that physicists these days don’t even bother with laws of motion until they have a Lagrangian they like. They’re so used to these things working that they literally assume all future laws will take this shape.

Most physicists will use Lagrangians to do their calculations, and then shout until they’re hoarse that it doesn’t mean anything at all, it’s just a mathematical trick, the universe really is just stuff bouncing into other stuff with no ultimate destination. But of course there’s another possibility, even if it’s one they’re unwilling to consider. Perhaps the universe is not a computer, perhaps its origin and its conclusion are fixed, and it speeds along a trajectory between the two that, when all possible histories are considered, is the only one it could have, and not one sparrow will fall to the ground without its care. Thinking about the universe this way would entail a philosophical revolution, one which our society isn’t ready for. But the history of scientific progress traces not a line, but a spiral, and the deep past may contain the seeds of our intellectual future.

So perhaps I will stop trolling the Aristotelians. Even if they deserve it.

[1] This is why the only kind of speculative theology I endorse is apophatic. It’s just too easy to fool yourself with any kind of “positive” theology, unless it’s totally rooted in scripture or another source of direct revelation.

[2] If you figure those out, here’s a harder one: every sailor knows that a sailboat “creates its own wind.” By Galileo’s principle of relativity, the wind experienced by the boat is the vector sum of the wind it would experience at rest and the headwind produced by its own motion. If the boat is sailing against the wind, this means that the “apparent” wind (the wind from the point of view of those on the boat) is stronger than the actual wind. But it’s the apparent wind that imparts energy to the boat’s sails, and whose direction matters for trimming, points of sail, etc. So, putting all of that together, why isn’t a sailboat a perpetual motion machine?

[3] I’ve read just enough Aristotle to be dangerous, but I think if we want to get technical, the dog’s desire for food isn’t its final cause. The final cause would be something more like “because it is the good of a dog to eat” or “because dogs are meant to eat.” This sounds a bit weirder to modern ears than the purposive explanation I gave in the example. But applying it to goal-directed beings still sounds a thousand times less weird than applying it to things like stones and electrons.

[4] Well, that’s what a lot of people thought, anyway, but it turns out it isn’t true. No, I don’t mean because quantum mechanics (maybe, depending on interpretation) makes the universe nondeterministic again. I mean because Newtonian mechanics itself isn’t deterministic!
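One standard illustration is John Norton’s “dome,” offered here as an example; the footnote itself doesn’t say which case it has in mind. A point mass placed at rest on the apex of a frictionless dome of a particular shape obeys, in suitably chosen units, the equation below, where r is the distance from the apex measured along the surface. The mass sitting still forever is a solution. But so is the mass that sits still until an arbitrary time T and then slides off, and both start from exactly the same initial state. (Check: if r = (t − T)⁴/144, then r″ = (t − T)²/12 = √r.)

```latex
\frac{d^2 r}{dt^2} = \sqrt{r}, \qquad r(0) = 0, \quad \dot{r}(0) = 0

r(t) = 0 \;\;\text{for all } t
\qquad\text{or}\qquad
r(t) = \begin{cases} 0, & t \le T \\ \tfrac{1}{144}\,(t - T)^4, & t \ge T \end{cases}
\quad\text{for any } T \ge 0
```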

[5] In some cases, it really is just that the universe picked a trajectory that optimized something that couldn’t be known “locally,” and the journalist can’t conceive of anything but efficient causality, so asserts that it “tried everything out.” In other cases, we can actually recover the extremal principle or teleological-seeming law by imagining that the various trajectories are interfering with each other (you can do this with Snell’s law using Huygens’ principle).

[6] A friend of mine who’s an actual theoretical physicist assures me that the action isn’t arbitrary at all, but his explanation was full of phrases like “tachyonic instability” and my eyes glazed over. Then he told me to read this paper, which I still have not done.

I think it’s worth noting that, as with a lot of things, the calculus of variations requires defining a set of boundary conditions from which to derive the Euler-Lagrange equations. Donald E. Kirk’s Optimal Control Theory has the best exploration of the consequences of this for various derived optimization problems. But the Newtonian-style formulation is a differential equation, where the boundary conditions are NOT inherent in the expression of the equations of motion; they are ad hoc and specific to the particular problem.

What I’m trying to say is that the teleological approach of Lagrangian mechanics already assumes a fixed beginning and end, whereas the Newtonian formulation does not. Lots of physical laws do this: Maxwell’s equations have an integral and a differential form, where the integral equations (a global, teleological form) have the boundary conditions embedded in them, and the differential ones (a local, more obviously causal form) do not. So it is circular reasoning to look at the global, Lagrangian formulation and discover that it implies a fixed endpoint; this is in fact an assumption of the method, not a consequence.

OMG Conan and physics. I'm now an unpaid subscriber*, so a shout out to the least action principle in the Feynman lectures. https://www.feynmanlectures.caltech.edu/II_19.html

*which kinda stinks, I don't have enough income to support all the substacks I read, and I hate subscribing 'cause it means more stuff in my email inbox. Idea for substack, charge me ~$100 a year and I get to 'support' ~10 substacks, and also not get any emails, maybe one summary email/ week